How Apple Uses Deep Learning to Fix Siri’s Robotic Voice

Earlier this year, Apple introduced the “Apple Machine Learning Journal,” a blog detailing Apple’s work on machine learning, AI and related topics. The blog is written entirely by Apple’s engineers and gives them a way to share their progress and interact with other researchers and engineers.

In a new round of papers published on the journal, Apple has shown how its AI technology improved the voice of its Siri digital assistant. One paper describes the deep learning-based speech synthesis system used by Siri and Apple Maps, which gives their voices “naturalness, personality and expressivity.”

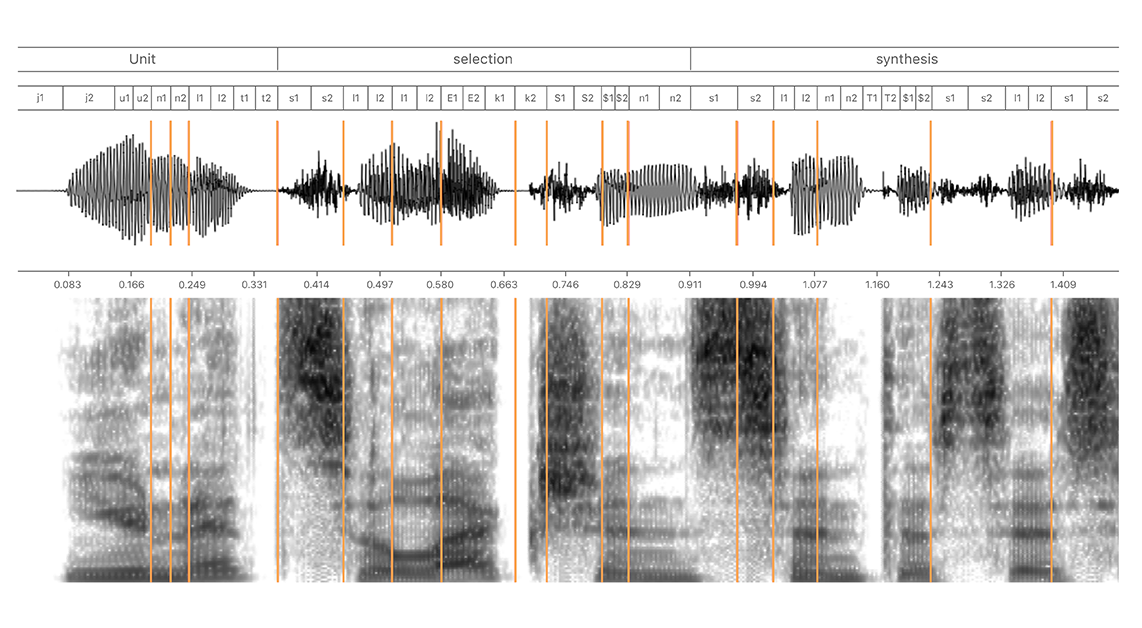

For iOS 11, Apple’s engineers worked with a new female voice actor to record 20 hours of US English speech, from which they generated between 1 and 2 million audio segments that were then used to train a deep learning system. The team noted in its paper that test subjects greatly preferred the new voice to the old one found in iOS 9, released in 2015.

Like its biggest competitors, Apple relies on machine learning, a rapidly evolving technology, to make its devices better at understanding what people want and at responding in a form people can understand. Much of machine learning today is built on neural networks trained with real-world data: thousands of labeled photos, for example, from which a network learns what a cat looks like.

The results are noticeable. Siri’s navigation instructions, answers to trivia questions and ‘request completed’ notifications sound much less robotic than they did two years ago. Audio samples at the end of Apple’s paper let readers hear the difference for themselves.

Apple’s Siri team also published two other posts today, titled “Improving Neural Network Acoustic Models by Cross-bandwidth and Cross-lingual Initialization” and “Inverse Text Normalization as a Labeling Problem.” The first describes techniques Apple uses to make introducing a new language as smooth as possible, and the second details how Siri uses machine learning to display dates, times, addresses and currency amounts in a properly formatted way.