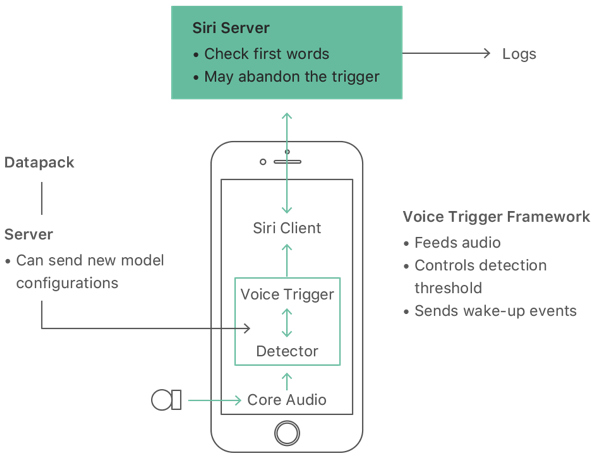

Apple Details How ‘Hey Siri’ Works Using Machine Learning

In its Machine Learning Journal this month, Apple shared some interesting details about what happens behind the scenes when you use the ‘Hey Siri’ feature on your iPhone and Apple Watch. Apple notes that the device’s microphone “turns your voice into a stream of instantaneous waveform samples, at a rate of 16000 per second”, before the detector decides whether you intended to invoke Siri with your voice.

Apple also highlights that ‘Hey Siri’ relies on the co-processor in iPhones to listen for the trigger phrase without requiring physical interaction or eating up battery life. The Apple Watch treats ‘Hey Siri’ differently: the detector only runs while the display is on, and there it uses only about 5% of the compute budget.

Apple also uses a flexible, two-level threshold for deciding whether or not you’re trying to invoke Siri:

“We built in some flexibility to make it easier to activate Siri in difficult conditions while not significantly increasing the number of false activations. There is a primary, or normal threshold, and a lower threshold that does not normally trigger Siri. If the score exceeds the lower threshold but not the upper threshold, then it may be that we missed a genuine “Hey Siri” event. When the score is in this range, the system enters a more sensitive state for a few seconds, so that if the user repeats the phrase, even without making more effort, then Siri triggers. This second-chance mechanism improves the usability of the system significantly, without increasing the false alarm rate too much because it is only in this extra-sensitive state for a short time.”
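
To make the second-chance mechanism in that quote a little more concrete, here is a minimal Python sketch of the two-threshold logic. It is not Apple's implementation: the class name, the threshold values, and the three-second window are all hypothetical, and it assumes that the "more sensitive state" simply means accepting any score above the lower threshold for a short time.

```python
from dataclasses import dataclass, field


@dataclass
class SecondChanceDetector:
    """Illustrative two-threshold trigger; all numbers are made-up assumptions."""

    primary_threshold: float = 0.8   # hypothetical normal threshold
    lower_threshold: float = 0.6     # hypothetical lower threshold
    sensitive_window_s: float = 3.0  # hypothetical stand-in for "a few seconds"
    # Time until which the detector stays in the extra-sensitive state.
    _sensitive_until: float = field(default=float("-inf"), init=False, repr=False)

    def process(self, score: float, now: float) -> bool:
        """Return True if a detector score seen at time `now` (seconds) triggers."""
        in_sensitive_state = now < self._sensitive_until
        # Assumption: while sensitive, the lower threshold is enough to trigger.
        threshold = self.lower_threshold if in_sensitive_state else self.primary_threshold

        if score > threshold:
            self._sensitive_until = float("-inf")  # leave the sensitive state
            return True

        if score > self.lower_threshold:
            # Near miss: above the lower threshold but below the primary one,
            # so become more sensitive for a short window.
            self._sensitive_until = now + self.sensitive_window_s
        return False


detector = SecondChanceDetector()
first = detector.process(0.7, now=0.0)   # near miss, does not trigger -> False
second = detector.process(0.7, now=1.0)  # same score repeated in time -> True
print(first, second)
```

In this sketch the same score of 0.7 fails on the first attempt but succeeds when repeated a second later, which is the "even without making more effort" behaviour the quote describes. If the repeat arrives after the window has expired, it is treated as a fresh near miss instead.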

You can read the full article at this link.