Google Tags Dad as Criminal After Son’s Medical Pics Sent to Doctor

Google terminated a San Francisco resident's account after misidentifying photos of his son's groin, which he had taken for a doctor, as child sexual abuse material.

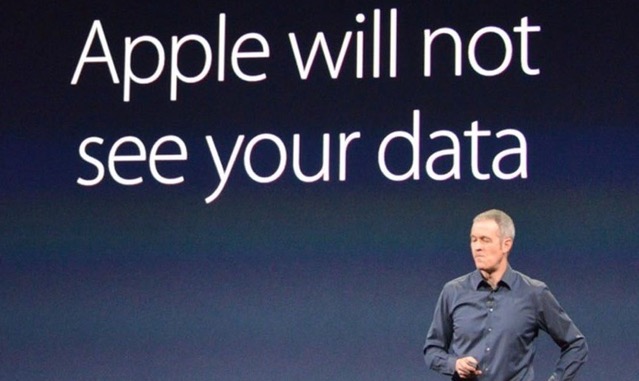

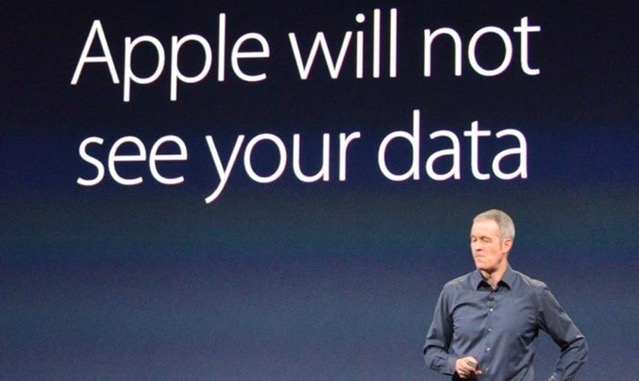

Collision in New Child Abuse Detection Algorithm ‘Not a Concern’ Says Apple

Researchers have discovered a collision in Apple’s child-abuse hashing system.

Apple Said to Introduce New Photo Identification Features to Detect Child Abuse

The features will use hashing algorithms to match the content of photos in users’ photo libraries.

Apple Starts Scanning iCloud Photos to Check for Child Abuse

The company will be screening all images backed up to its online storage service.

Apple Pre-Screening Uploaded Content for Child Abuse Imagery

Apple says it does so to protect its services for the benefit of all users.