Apple Drops its Plans to Scan User Photos for CSAM

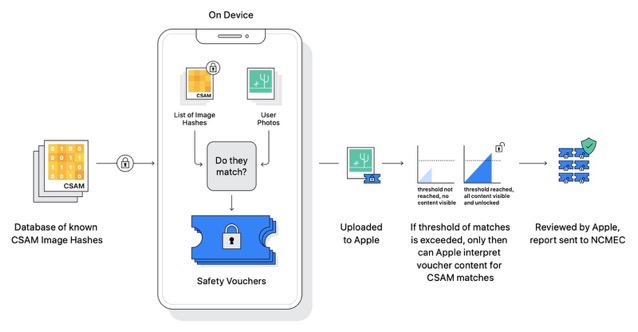

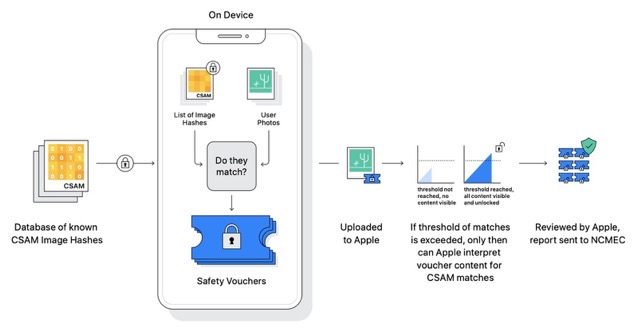

The CSAM scanning tool was meant to preserve privacy while allowing the company to flag potentially problematic and abusive content.

Apple Needs to Drop CSAM Detection Plans Entirely, Says Digital Rights Group

Apple must scrap data surveillance plans to maintain its promises of user privacy.

Apple Confirms It’s Been Scanning iCloud Mail for CSAM Since 2019

The Cupertino company has confirmed that it has been scanning iCloud Mail for CSAM since at least 2019.

OpenMedia Petition Urges Apple to Scrap CSAM Detection System

Activist group says Apple is going back on its promise to protect user privacy.

Over 90 Activist Groups Ask Apple to Abandon CSAM Detection System

Privacy advocates are concerned the system "will be used to censor protected speech" and "threaten the privacy and security of people around the world".

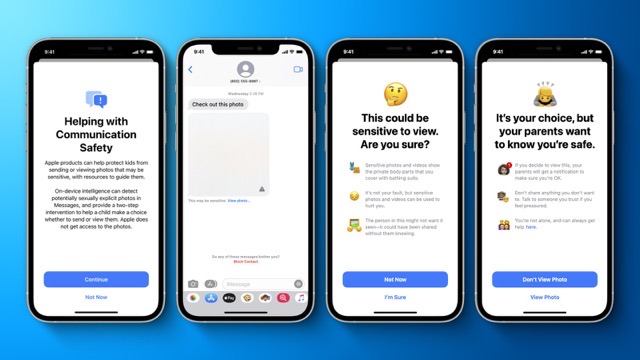

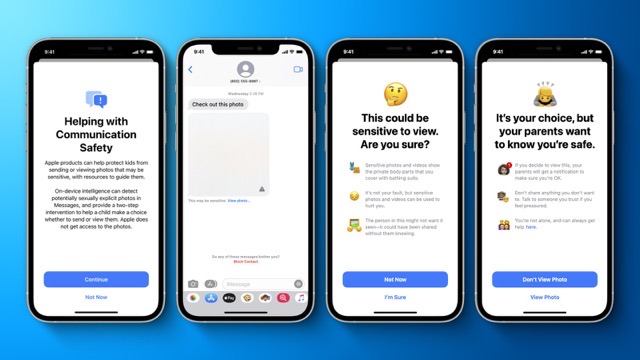

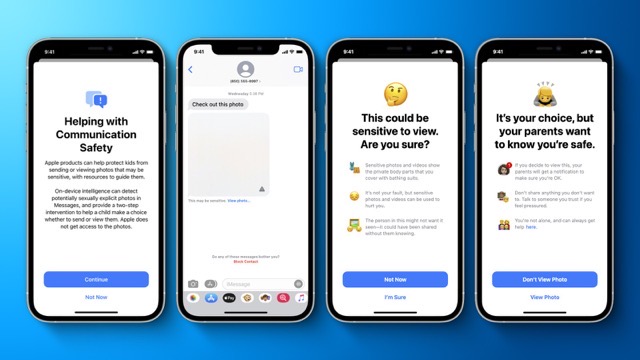

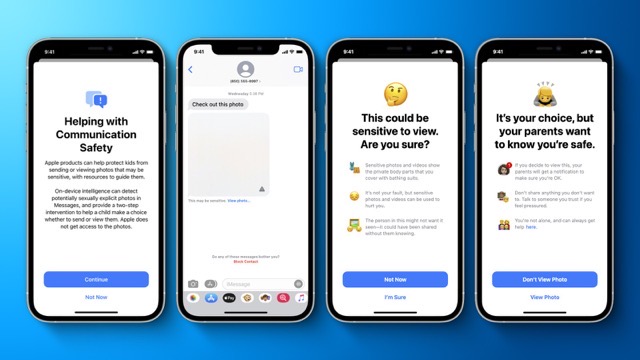

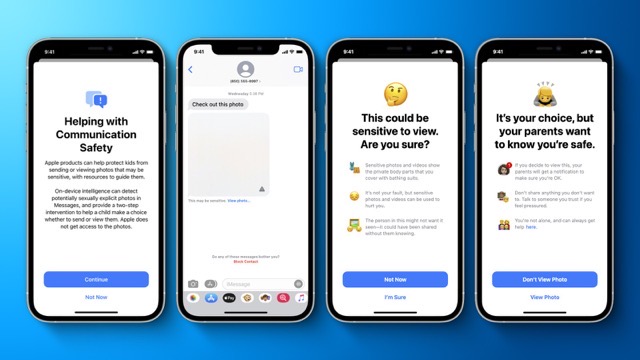

Apple Details its CSAM Detection System’s Privacy and Security

Apple has just provided a more detailed overview of its new child safety features.

Apple Privacy Head Addresses Concerns About New CSAM Detection System

Erik Neuenschwander talks about the new features launching for Apple devices.

WhatsApp Says Apple’s CSAM Detection System ‘Very Concerning’

How the tables have turned.

Apple Shares Expansion Plans for its New CSAM Detection System

The CSAM detection system will be limited to the U.S. at launch.