Google Search Introduces New Multitask Unified Model (MUM) Technology

Google Search has just introduced a new technology called Multitask Unified Model, or MUM, which has the potential to transform how Google helps you with complex tasks.

Built on a Transformer architecture, MUM not only understands language, but also generates it. It is trained across 75 different languages and many different tasks at once, allowing it to develop a more comprehensive understanding of information.

According to Google, MUM is multimodal, meaning it understands information across text and images and, in the future, could expand to more modalities such as video and audio.

For example, suppose there is helpful information about Mt. Fuji written in Japanese; today, you probably won’t find it unless you search in Japanese. MUM, however, could transfer knowledge from sources across languages and use those insights to find the most relevant results in your preferred language.

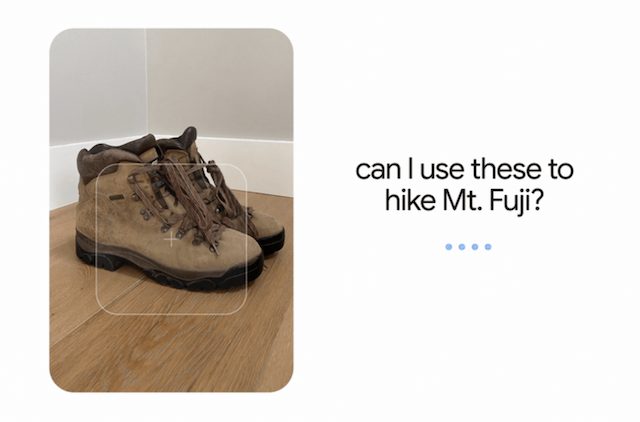

“You might be able to take a photo of your hiking boots and ask, ‘Can I use these to hike Mt. Fuji?’ MUM would understand the image and connect it with your question to let you know your boots would work just fine. It could then point you to a blog with a list of recommended gear.”

Google will bring MUM-powered features and improvements to its products in the coming months and years.