Apple Removes References to CSAM Scanning Feature From Child Safety Website [Update]

Apple appears to have scrubbed any reference to its controversial Child Safety plans to scan iCloud Photo libraries for Child Sexual Abuse Material (CSAM).

As noted by MacRumors, Apple has removed all mentions of CSAM from its Child Safety website, suggesting its controversial plan to detect child sexual abuse images on iPhones and iPads may hang in the balance following significant criticism of its methods.

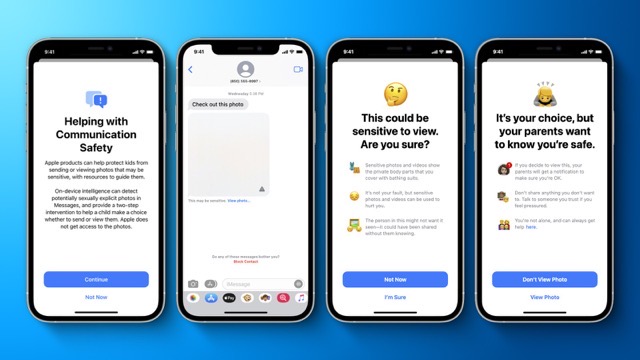

Apple in August announced a planned suite of new child safety features, which included scanning users’ iCloud Photos libraries for CSAM. The suite also included a feature that would use on-device machine learning to warn about sensitive content and allow parents to play a more informed role in helping their children navigate the world of online communication.

The system would have relied on a database of hashes of known CSAM and wouldn’t have involved actually looking at or scanning any user photos, but it was met with widespread user outrage and pushback from global figures including Edward Snowden, as well as from various security and privacy experts.

As of December 13, Apple’s Child Safety page contains only references to Communication Safety in Messages and expanded guidance for Siri, Spotlight, and Safari Search, the former having debuted in iOS 15.2 earlier this week.

At the time of the delay, Apple said in a statement: “…based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

Update: Apple told The Verge the feature is delayed, not cancelled:

Apple spokesperson Shane Bauer said that the company’s position hasn’t changed since September, when it first announced it would be delaying the launch of the CSAM detection. “Based on feedback from customers, advocacy groups, researchers, and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features,” the company’s September statement read.