Apple Drops Its Plans to Scan User Photos for CSAM

Apple has permanently dropped its child sexual abuse material (CSAM) detection tool for iCloud Photos in response to the feedback and guidance it received, Wired reports.

Apple had delayed its CSAM detection features amid widespread criticism from privacy and security researchers and digital rights groups, who were concerned that the surveillance capability itself could be abused to undermine the privacy and security of iCloud users.

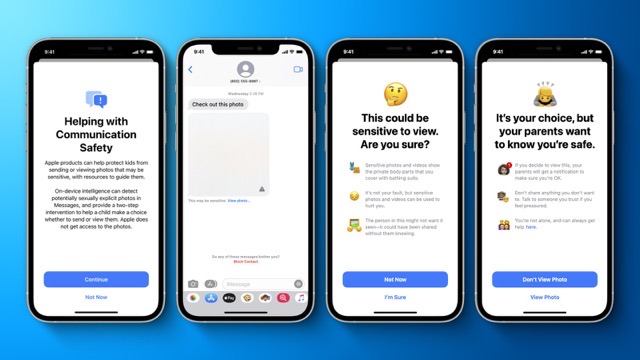

Now, the company is focusing its anti-CSAM efforts and investments on its "Communication Safety" features, which it announced in August 2021 and launched in December 2021.

Parents and caregivers can opt into the protections through family iCloud accounts. The features work in Siri, Apple's Spotlight search, and Safari Search to warn users who are looking at or searching for CSAM and to provide on-the-spot resources for reporting the content and seeking help.

“After extensive consultation with experts to gather feedback on child protection initiatives we proposed last year, we are deepening our investment in the Communication Safety feature that we first made available in December 2021,” Apple said in a statement.

“We have further decided to not move forward with our previously proposed CSAM detection tool for iCloud Photos. Children can be protected without companies combing through personal data, and we will continue working with governments, child advocates, and other companies to help protect young people, preserve their right to privacy, and make the internet a safer place for children and for us all.”

Apple's CSAM update comes alongside its announcement today that it is expanding its end-to-end encryption offerings for iCloud, including extending that protection to backups and photos stored on the cloud service.