Apple Pre-Screening Uploaded Content for Child Abuse Imagery

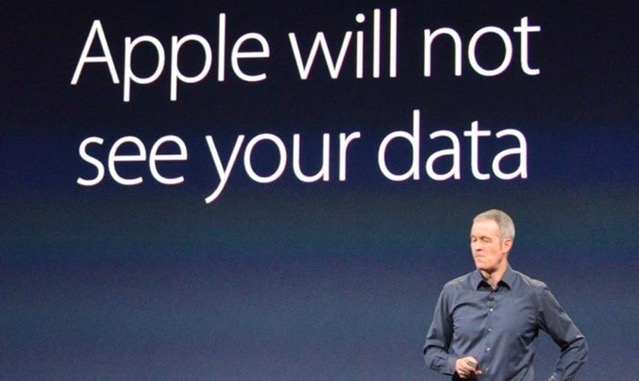

As pointed out by The Mac Observer, Apple has recently updated its privacy policy to state that it may pre-screen or scan uploaded content for potentially illegal material, including child sexual exploitation imagery. The iPhone maker may have been doing this for years, but the source notes that this is the first time Apple has disclosed it in its privacy policy.

Under the “How we use your personal information” heading in Apple’s updated privacy policy, one of the paragraphs now reads as follows:

We may also use your personal information for account and network security purposes, including in order to protect our services for the benefit of all our users, and pre-screening or scanning uploaded content for potentially illegal content, including child sexual exploitation material.

Apple states on its iCloud security page that customer data is encrypted both in transit and on iCloud servers, which suggests that any scanning happens before the content is encrypted. Here’s what Apple’s legal terms for iCloud say:

You acknowledge that Apple is not responsible or liable in any way for any Content provided by others and has no duty to pre-screen such Content. Apple reserves the right at all times to determine whether Content is appropriate and in compliance with this Agreement, and may pre-screen, move, refuse, modify and/or remove Content at any time, without prior notice and in its sole discretion, if such Content is found to be in violation of this Agreement or is otherwise objectionable.

This raises a question: how can Apple determine whether content is appropriate if it isn’t scanning iCloud content, given that the content is encrypted? Shouldn’t Apple clearly define what it means by “appropriate”? And wouldn’t you want to know how much Apple can see of its customers’ content in general?

Let us know your thoughts in the comments section below.