Apple Previews New Child Safety Features Coming to iOS, macOS

We reported earlier that Apple was expected to soon introduce new photo identification features to combat child abuse. Apple has now previewed new child safety features for iOS and macOS, which will roll out with software updates later this year (via MacRumors).

The new features will be available only in the U.S. at launch and will be enabled for users in Canada and other regions over time, Apple said. Below is a brief rundown of the newly announced child safety features.

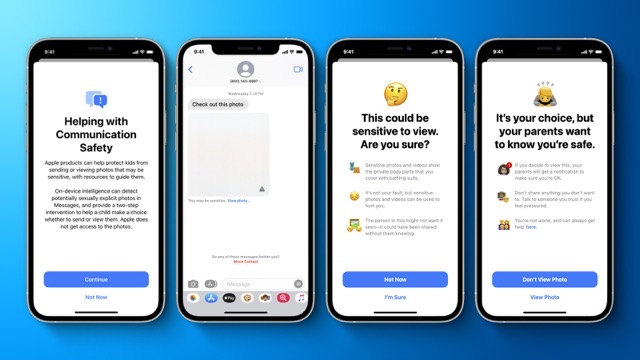

Communication Safety

The Messages app will be getting a new Communication Safety feature that warns children and their parents when sexually explicit photos are received or sent. The app will use on-device machine learning to analyze image attachments. If a photo is determined to be sexually explicit, it will be automatically blurred and the child will be warned.

Scanning Photos for Child Sexual Abuse Material (CSAM)

Apple will also be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling the company to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies.

Apple said its method of detecting known CSAM is designed with user privacy in mind. The hashing technology, called NeuralHash, analyzes an image and converts it to a unique number specific to that image, noted Apple.
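For readers curious about what "converting an image to a number" can look like, here is a rough Python sketch of a much simpler technique, a toy average hash. This is not NeuralHash, whose internals are not described here. The function names, the tiny 2x2 grayscale "images", and the `max_distance` threshold are all made up for illustration.

```python
def average_hash(pixels):
    """Hash a grayscale image (rows of 0-255 ints) into an integer.

    Each pixel contributes one bit: 1 if brighter than the image mean, else 0.
    This is a toy average hash, NOT Apple's NeuralHash.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a, b):
    """Count the bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


# Hypothetical sample data: a "known" image, a brightened copy, and an unrelated image.
original = [[10, 200], [220, 30]]
brighter = [[20, 210], [230, 40]]
different = [[200, 10], [30, 220]]

# Hypothetical database holding only hashes of known images, never the images themselves.
known_hashes = {average_hash(original)}


def matches_known(pixels, max_distance=0):
    """Return True if the image's hash is within max_distance bits of a known hash."""
    h = average_hash(pixels)
    return any(hamming_distance(h, k) <= max_distance for k in known_hashes)


print(matches_known(brighter))   # the brightened copy hashes to the same value
print(matches_known(different))  # the unrelated image does not
```

The point of the sketch is that matching happens on hashes rather than on the photos themselves, and that a lightly altered copy can still produce the same hash.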

Expanded CSAM Guidance in Siri and Search

Apple also plans to expand guidance in Siri and Spotlight Search across devices by providing additional resources to help children and parents stay safe online and get help with unsafe situations.

These updates are coming later this year in an update to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.