Apple Shares Expansion Plans for Its New CSAM Detection System

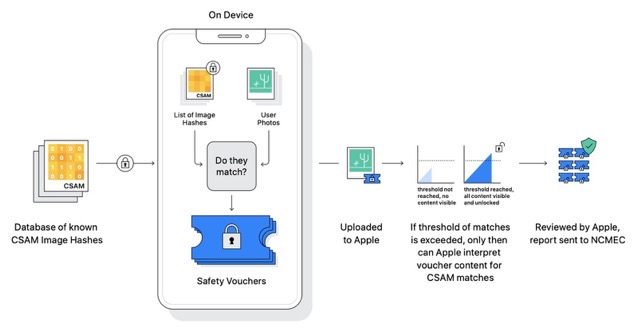

Yesterday, Apple previewed new child safety features coming to iOS and macOS in software updates later this year. They include a system for detecting Child Sexual Abuse Material (CSAM), which has raised concerns among some security researchers.

Although Apple said its method of detecting known CSAM is designed with user privacy in mind, researchers say the company could eventually be forced by governments to add non-CSAM images to the hash list for nefarious purposes, such as to suppress political activism.

To address these concerns, Apple today provided additional information about its CSAM detection system and how it plans to extend the system to countries other than the U.S. (via MacRumors).

Apple says it will consider any potential global expansion of the system “on a country-by-country basis after conducting a legal evaluation.” The company also addressed the possibility of a particular region in the world “deciding to corrupt a safety organization in an attempt to abuse the system.”

Apple said the system’s first layer of protection is an undisclosed threshold of matching images that must be exceeded before a user is flagged for having inappropriate imagery. If a corrupted hash list did push an account past that threshold, Apple said its manual review process would serve as a second barrier: reviewers would find that the flagged images contain no known CSAM. In that case, Apple said, it would not report the user to the National Center for Missing & Exploited Children (NCMEC) or to law enforcement, and the system would still be working exactly as designed.
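To make the two layers concrete, here is a minimal, hypothetical sketch of the decision flow Apple describes: count matches against a hash list, escalate only past a threshold, and report only if a human reviewer confirms the matches. The function `evaluate_account`, the `Decision` type, and the string "hashes" are illustrative assumptions, not Apple's implementation. The sketch assumes "exceeded" means strictly greater than the threshold, and it omits the perceptual hashing and cryptographic protocols Apple's actual system relies on.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set


@dataclass
class Decision:
    match_count: int
    sent_to_review: bool
    reported: bool


def evaluate_account(
    image_hashes: Iterable[str],
    known_hashes: Set[str],
    threshold: int,
    reviewer_confirms: Callable[[List[str]], bool],
) -> Decision:
    """Hypothetical two-layer gate: a match threshold, then manual review."""
    # Layer 1: count uploaded images whose hash appears on the known-hash list.
    matches = [h for h in image_hashes if h in known_hashes]
    if len(matches) <= threshold:
        # Threshold not exceeded: the account is never flagged or reviewed.
        return Decision(len(matches), sent_to_review=False, reported=False)
    # Layer 2: a human reviewer checks whether the matches are actually known CSAM.
    # If the hash list was corrupted with non-CSAM images, the reviewer rejects them.
    confirmed = reviewer_confirms(matches)
    return Decision(len(matches), sent_to_review=True, reported=confirmed)
```

In the corrupted-database scenario, an account can exceed the threshold and reach review, but a reviewer who finds no CSAM returns `False`, so `reported` stays `False`. That is the outcome Apple describes as the system "working exactly as designed."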

Apple also pointed out that some organizations, such as the Family Online Safety Institute, have praised the company for its efforts to fight child abuse.