Apple Details its CSAM Detection System’s Privacy and Security

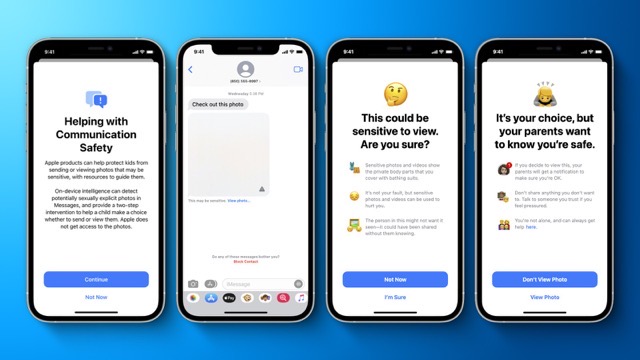

Apple has just provided a more detailed overview of its recently announced child safety features in a new support document, outlining the design principles, security and privacy requirements, and threat model considerations of its CSAM detection system (via MacRumors).

The document addresses concerns raised about the company’s plan to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos.

Apple says that the on-device database of known CSAM images contains only entries that were independently submitted by two or more child safety organizations operating in separate sovereign jurisdictions and not under the control of the same government.
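The inclusion rule described above can be illustrated with a minimal sketch. This is not Apple's code: the function name `eligible_hashes`, the tuple layout of each submission, and the organization and jurisdiction labels are all hypothetical, chosen only to show how an entry might be admitted when it was submitted from at least two distinct jurisdictions.

```python
from collections import defaultdict

def eligible_hashes(submissions):
    """Return the hashes submitted from at least two distinct jurisdictions.

    `submissions` is an iterable of (organization, jurisdiction, hashes)
    tuples. This layout is an assumption made for illustration only.
    """
    jurisdictions_by_hash = defaultdict(set)
    for _organization, jurisdiction, hashes in submissions:
        for h in hashes:
            jurisdictions_by_hash[h].add(jurisdiction)
    # Two organizations under the same government count as one jurisdiction,
    # so an entry they both submit is still excluded.
    return {h for h, seen in jurisdictions_by_hash.items() if len(seen) >= 2}

# Hypothetical example data.
submissions = [
    ("org-a", "jurisdiction-1", {"h1", "h2"}),
    ("org-b", "jurisdiction-1", {"h2", "h3"}),
    ("org-c", "jurisdiction-2", {"h3", "h4"}),
]
print(sorted(eligible_hashes(submissions)))  # ['h3']
```

In this example only `h3` qualifies: `h2` was submitted by two organizations, but both operate in the same jurisdiction.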

“The system is designed so that a user need not trust Apple, any other single entity, or even any set of possibly-colluding entities from the same sovereign jurisdiction (that is, under the control of the same government) to be confident that the system is functioning as advertised. This is achieved through several interlocking mechanisms, including the intrinsic auditability of a single software image distributed worldwide for execution on-device.”

Apple also plans to publish a support document on its website containing a root hash of the encrypted CSAM hash database included with each version of every Apple operating system that supports the feature.
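To show why a published root hash makes the database auditable, here is a rough sketch of how one digest can commit to an entire list of entries. The article does not say how Apple derives its root hash, so the Merkle-tree construction, the use of SHA-256, and the function names `merkle_root` and `matches_published` are assumptions for illustration only.

```python
import hashlib

def merkle_root(entries):
    """Compute a Merkle root over a list of byte strings using SHA-256.

    Leaves and inner nodes get different prefixes so that one cannot be
    mistaken for the other. An odd node at any level is paired with itself.
    """
    if not entries:
        return hashlib.sha256(b"").digest()
    level = [hashlib.sha256(b"\x00" + entry).digest() for entry in entries]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [
            hashlib.sha256(b"\x01" + level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]

def matches_published(entries, published_hex):
    """Check a local copy of the database against a published root hash."""
    return merkle_root(entries).hex() == published_hex.lower()

# Hypothetical database contents; real entries would be encrypted hashes.
database = [b"entry-1", b"entry-2", b"entry-3"]
published = merkle_root(database).hex()
print(matches_published(database, published))
print(matches_published(database + [b"extra-entry"], published))
```

Under these assumptions, any added, removed, or altered entry changes the root, so a device whose database does not match the published value has received a different database.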