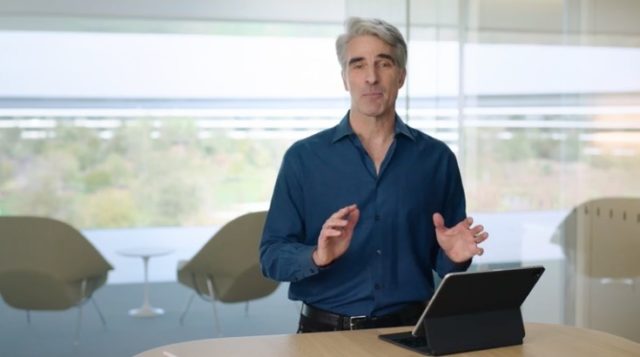

Apple SVP Craig Federighi Acknowledges Confusion Around Apple Child Safety Features

In a new interview, Apple SVP Craig Federighi discussed the reaction to the iCloud Child Safety features announced last week.

Federighi, Apple’s senior vice president of software engineering, emphasized that the new system will be auditable, but conceded that the tech giant stumbled in last week’s unveiling of two new tools.

The first is aimed at identifying known sexually explicit images of children stored in the company’s cloud storage service, and the second will allow parents to better monitor what images are being shared with and by their children through text messages.

“We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we’re doing, and we can see that it’s been widely misunderstood,” he told the Wall Street Journal in an interview.

“I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion,” he continued. “It’s really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, ‘oh my god, Apple is scanning my phone for images.’ This is not what is happening.”

The Apple exec also noted a few new details regarding the system’s safeguards, including the fact that users will need to have around 30 matches for CSAM in their Photos library before Apple is notified. The company then confirms whether the images are indeed real instances of CSAM:

If and only if you meet a threshold of something on the order of 30 known child pornographic images matching, only then does Apple know anything about your account and know anything about those images, and at that point, only knows about those images, not about any of your other images. This isn’t doing some analysis for did you have a picture of your child in the bathtub? Or, for that matter, did you have a picture of some pornography of any other sort? This is literally only matching on the exact fingerprints of specific known child pornographic images.

Federighi also explained the security advantage of running the matching process directly on the iPhone rather than on iCloud’s servers:

Because it’s on the [phone], security researchers are constantly able to introspect what’s happening in Apple’s [phone] software. So if any changes were made that were to expand the scope of this in some way —in a way that we had committed to not doing—there’s verifiability, they can spot that that’s happening.

Overall, Federighi acknowledged the confusion about the tools.

“In hindsight, introducing these two features at the same time was a recipe for this kind of confusion,” he said. “By releasing them at the same time, people technically connected them and got very scared: What’s happening with my messages? The answer is…nothing is happening with your messages.”