Meta AI Unveils ‘Voicebox’ Speech Generation Model

Meta AI researchers have just introduced Voicebox, a groundbreaking speech generation model that can perform tasks it was not specifically trained on.

According to Meta, the model achieves state-of-the-art performance in speech generation, marking a significant milestone for AI technology.

Voicebox stands out among generative systems for the range of audio it can produce. Whereas many generative models output images or text, Voicebox produces high-quality audio clips, placing it at the forefront of speech synthesis.

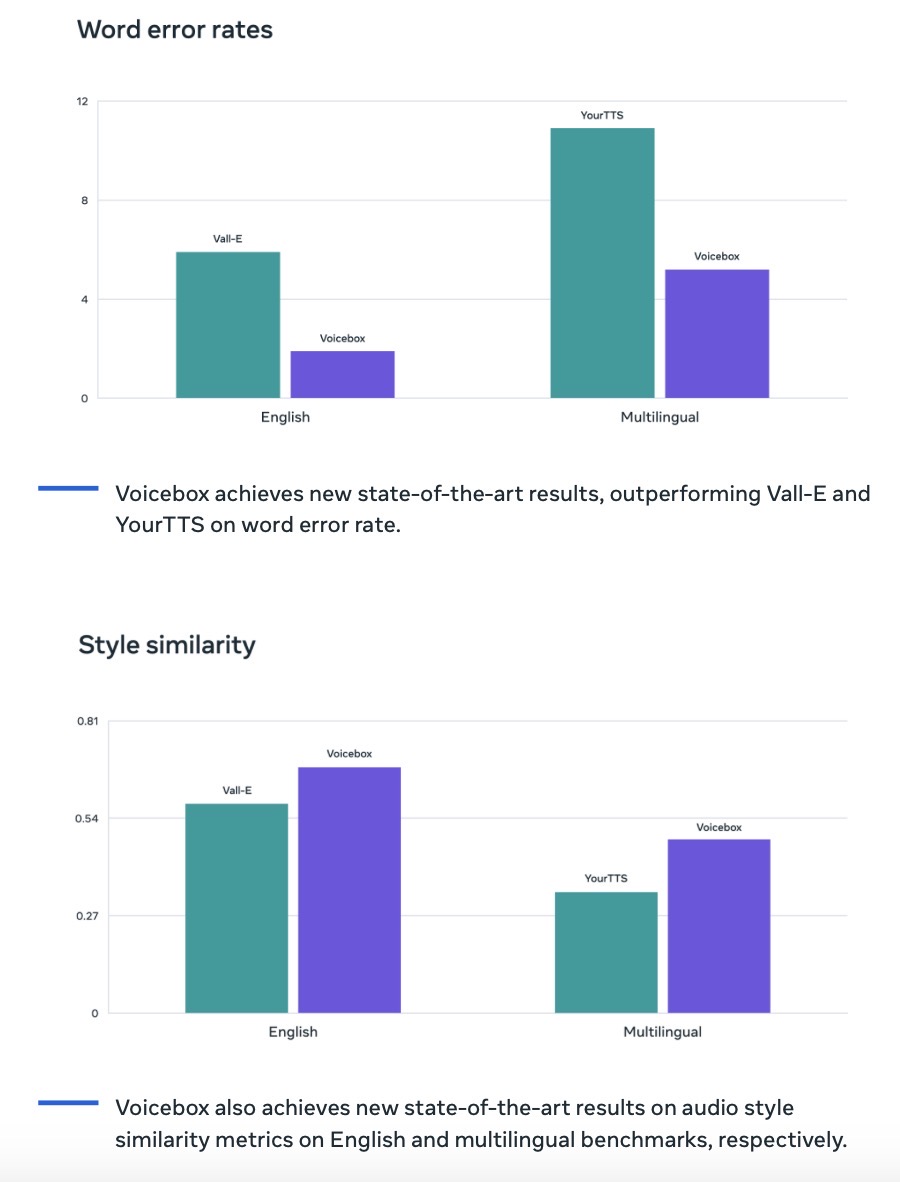

The model works in six languages and handles tasks such as noise removal, content editing, style conversion, and the generation of diverse speech samples.

At the core of Voicebox is a training method called Flow Matching, which Meta reports improves on diffusion models. Using it, Voicebox outperforms VALL-E, the current leading English model, on zero-shot text-to-speech tasks.
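The article does not explain how Flow Matching works, so here is a rough illustration only: a minimal pure-Python sketch of a conditional Flow Matching objective using the commonly used straight-line ("optimal transport") path between a noise sample and a data sample. It is not Meta's code. The function names, the `SIGMA_MIN` value, the `model(x_t, t)` signature, and the use of plain lists as vectors are all assumptions made for the example. A network would be trained to predict the velocity that moves noise toward data; at generation time, integrating that velocity with a simple Euler solver turns noise into an output.

```python
import random
from typing import Callable, List

Vector = List[float]
# Hypothetical velocity-model signature: model(x_t, t) -> predicted velocity.
VelocityModel = Callable[[Vector, float], Vector]

SIGMA_MIN = 1e-5  # assumed small noise floor at t = 1; illustrative value only


def ot_path_sample(x0: Vector, x1: Vector, t: float, sigma_min: float = SIGMA_MIN) -> Vector:
    """Point x_t on the straight-line path from noise x0 (t = 0) to data x1 (t = 1)."""
    return [(1.0 - (1.0 - sigma_min) * t) * a + t * b for a, b in zip(x0, x1)]


def ot_target_velocity(x0: Vector, x1: Vector, sigma_min: float = SIGMA_MIN) -> Vector:
    """Regression target: d x_t / dt, which is constant along this straight path."""
    return [b - (1.0 - sigma_min) * a for a, b in zip(x0, x1)]


def flow_matching_loss(model: VelocityModel, x1: Vector, rng: random.Random) -> float:
    """One Monte Carlo sample of the conditional Flow Matching loss (mean squared error)."""
    x0 = [rng.gauss(0.0, 1.0) for _ in x1]  # Gaussian noise sample
    t = rng.random()                          # time drawn uniformly from [0, 1)
    xt = ot_path_sample(x0, x1, t)
    target = ot_target_velocity(x0, x1)
    pred = model(xt, t)
    return sum((p - u) ** 2 for p, u in zip(pred, target)) / len(x1)


def euler_sample(model: VelocityModel, dim: int, steps: int, rng: random.Random) -> Vector:
    """Generate a sample by integrating the velocity field from t = 0 to t = 1."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from pure noise
    h = 1.0 / steps
    for i in range(steps):
        t = i * h
        v = model(x, t)
        x = [xi + h * vi for xi, vi in zip(x, v)]
    return x
```

As a sanity check, a toy "oracle" velocity `(x1 - x) / (1 - t)` that always points at one fixed target (valid when `sigma_min` is 0) makes `euler_sample` land on that target, because the path is a straight line. A real model would instead be a neural network trained by minimizing `flow_matching_loss` over many data samples.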

Voicebox was trained on more than 50,000 hours of recorded speech, learning to predict a speech segment from the surrounding audio and the segment's transcript.

Because it learns to fill in speech from context, the model can regenerate a portion of an audio recording without recreating the entire input, which makes it highly versatile.
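The idea of predicting a hidden stretch of audio from its surroundings, then replacing only that stretch, can be illustrated with a small masking sketch. Everything here is an assumption for illustration, not Meta's implementation: audio is treated as a list of feature frames (for example, spectrogram columns), `random_span_mask`, `masked_context`, `masked_mse`, and `splice_infill` are hypothetical helpers, the mask-ratio arguments are made-up parameters, and conditioning on the transcript is omitted entirely. In this sketch the model would see only the unmasked context during training and be scored only on the masked frames; at editing time, only the masked region is replaced, so the rest of the recording is kept as-is.

```python
import random
from typing import List

Frame = List[float]  # one acoustic feature frame, e.g. a spectrogram column (assumed)


def random_span_mask(num_frames: int, min_ratio: float, max_ratio: float,
                     rng: random.Random) -> List[bool]:
    """Mark one contiguous span of frames (True = hidden from the model, to be predicted)."""
    span = max(1, int(num_frames * rng.uniform(min_ratio, max_ratio)))
    span = min(span, num_frames)
    start = rng.randrange(0, num_frames - span + 1)
    return [start <= i < start + span for i in range(num_frames)]


def masked_context(frames: List[Frame], mask: List[bool]) -> List[Frame]:
    """Zero out the masked frames so the model only sees the surrounding audio."""
    return [[0.0] * len(f) if m else list(f) for f, m in zip(frames, mask)]


def masked_mse(pred: List[Frame], target: List[Frame], mask: List[bool]) -> float:
    """Mean squared error over masked frames only; the unmasked context is not scored."""
    errors = [
        (p - q) ** 2
        for pf, tf, m in zip(pred, target, mask) if m
        for p, q in zip(pf, tf)
    ]
    return sum(errors) / len(errors) if errors else 0.0


def splice_infill(original: List[Frame], generated: List[Frame],
                  mask: List[bool]) -> List[Frame]:
    """Keep the original audio outside the mask; take model output only inside it."""
    return [list(g) if m else list(o) for o, g, m in zip(original, generated, mask)]
```

Masking one contiguous span, rather than scattered frames, mirrors the editing use case described above, where a single word or phrase in a recording is regenerated.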

Although generative speech models like Voicebox present numerous exciting possibilities, Meta AI acknowledges the potential risks associated with their misuse.

As a result, the Voicebox model and its underlying code will not be made publicly available at this time.

For further insight into Meta AI’s progress, interested readers can consult the accompanying research paper.