ChatGPT Can Now ‘See, Hear and Speak’ in Major Update

OpenAI has announced the rollout of new voice and image capabilities for ChatGPT, offering a more intuitive user interface. The update allows for voice conversations and visual interactions, enhancing the ways ChatGPT can be utilized in daily life.

“Voice and image give you more ways to use ChatGPT in your life,” OpenAI stated on Monday.

Users can snap pictures of landmarks or their pantry to discuss various topics, from travel to meal planning. The voice feature is available on iOS and Android, while image sharing is accessible across all platforms. To enable voice, go to Settings in the mobile app, select New Features, and opt in to voice conversations. Then tap the headphone icon at the top-right of the home screen and choose one of the five available voices.

The voice capability is powered by a new text-to-speech model and uses OpenAI’s open-source speech recognition system, Whisper, to transcribe spoken words into text.

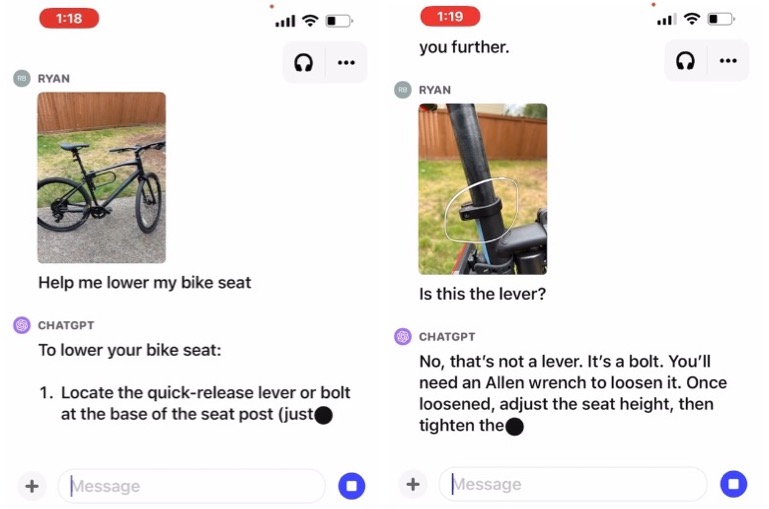

Image understanding is powered by multimodal GPT-3.5 and GPT-4 models, which apply language reasoning skills to a wide range of images, such as photographs and screenshots. In one example, a user submits images of a bike and asks how to adjust the seat; ChatGPT responds with specific instructions and even offers corrections when the user asks a question. Really impressive stuff.

OpenAI emphasized its commitment to safety and responsible usage, citing its collaboration with Be My Eyes, a mobile app for blind and low-vision people. “We’ve also taken technical measures to significantly limit ChatGPT’s ability to analyze and make direct statements about people,” OpenAI added. The company says it has been transparent about the model’s limitations, especially in specialized fields like research and with languages that use non-Roman scripts.

The new features will initially roll out to paid Plus and Enterprise users over the next two weeks, with plans to expand access to other user groups, including developers, in the near future. OpenAI aims to refine the technology based on real-world usage and feedback.

Our Skynet overlords are just around the corner, folks.