Apple’s iMessage to Stay as UK Postpones Encryption-Scanning Powers

The UK has decided to postpone the use of contentious powers in its online safety bill, which would require messaging apps to be scanned for harmful content, until a viable technology is developed. Critics have repeatedly warned that these measures pose a significant threat to user privacy.

Addressing the House of Lords, Lord Stephen Parkinson, the junior arts and heritage minister, said that the tech regulator, Ofcom, would instruct companies to scan their networks only once a technology capable of doing so becomes available. Security experts, however, say it could take years for such a technology to emerge, if it ever does.

Lord Parkinson further clarified, “A notice can only be issued where technically feasible and when technology is accredited as meeting minimum standards of accuracy in detecting only child sexual abuse and exploitation content,” the Financial Times reports.

The online safety bill, now in its final stages in parliament, is among the toughest attempts by any government to hold Big Tech companies accountable for the content on their platforms.

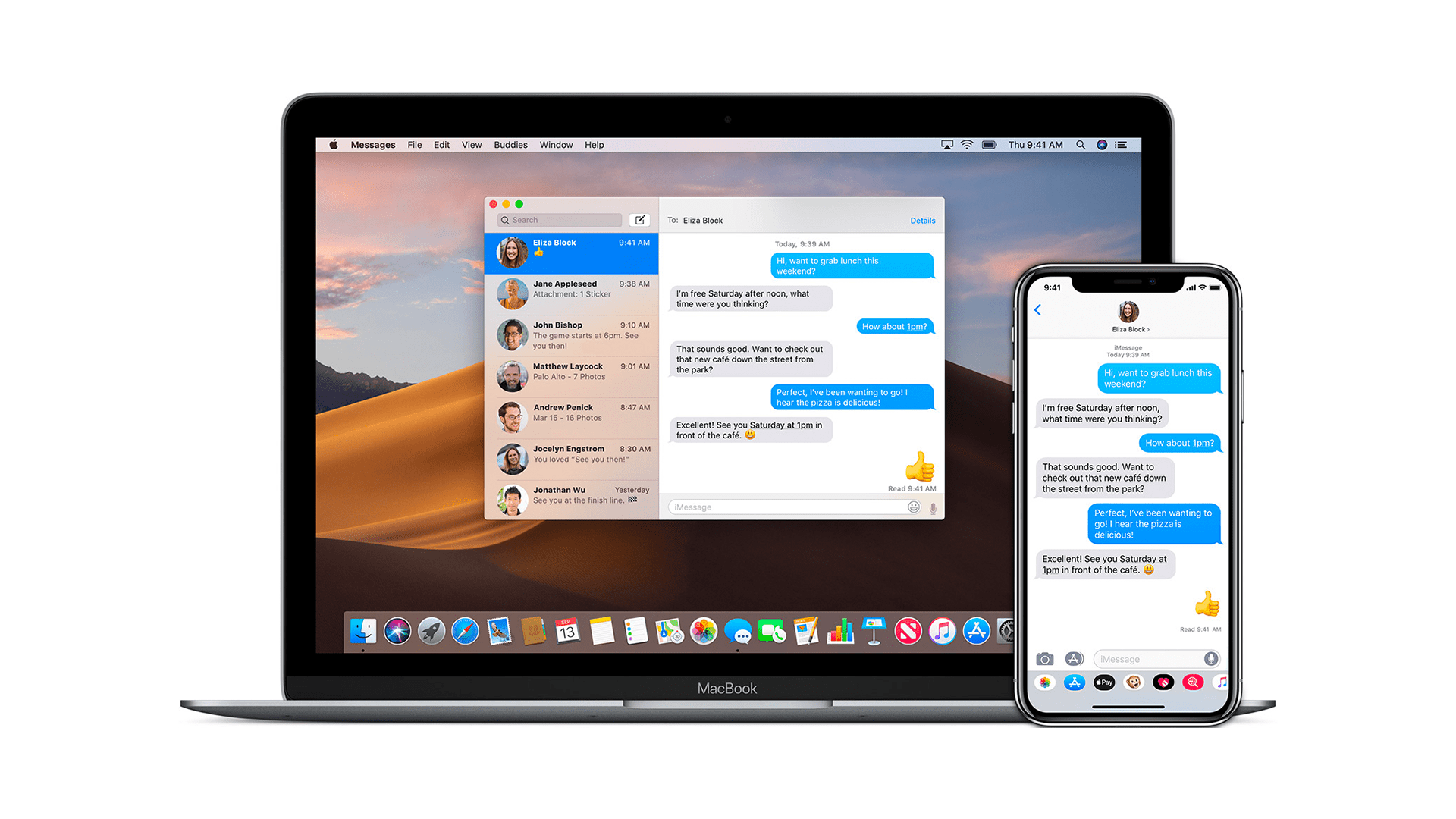

Several messaging platforms, including Signal and WhatsApp, which is owned by Facebook’s parent Meta, have strongly opposed specific provisions of the bill. They have even warned that they could leave the UK market if forced to compromise their encryption standards. Apple said it would remove iMessage and FaceTime from the UK if the law were enacted.

Meredith Whittaker, president of Signal, said the decision was a “victory” for tech companies, albeit not an absolute one. Will Cathcart, head of WhatsApp, maintained the company’s stance on encryption and highlighted the risks to user privacy.

It is widely understood that current technology cannot scan end-to-end encrypted messages without breaching users’ privacy. Despite this, the UK government said that its stance remains unchanged and that, under specific conditions, it might still direct companies to develop or use technology to identify and remove illegal child abuse content.

Child safety campaigners have been urging the government to take a stricter stance against tech companies over abusive material shared on apps. Richard Collard, who leads child safety online policy at the NSPCC, emphasized the importance of balancing user safety and privacy rights.