Instagram’s Algorithm Found Targeting Adults with Inappropriate Videos

Instagram’s Reels, a feature that serves users short videos, has drawn concern because its algorithm delivers unsuitable content to adults who follow children on the platform.

[Image: The Wall Street Journal]

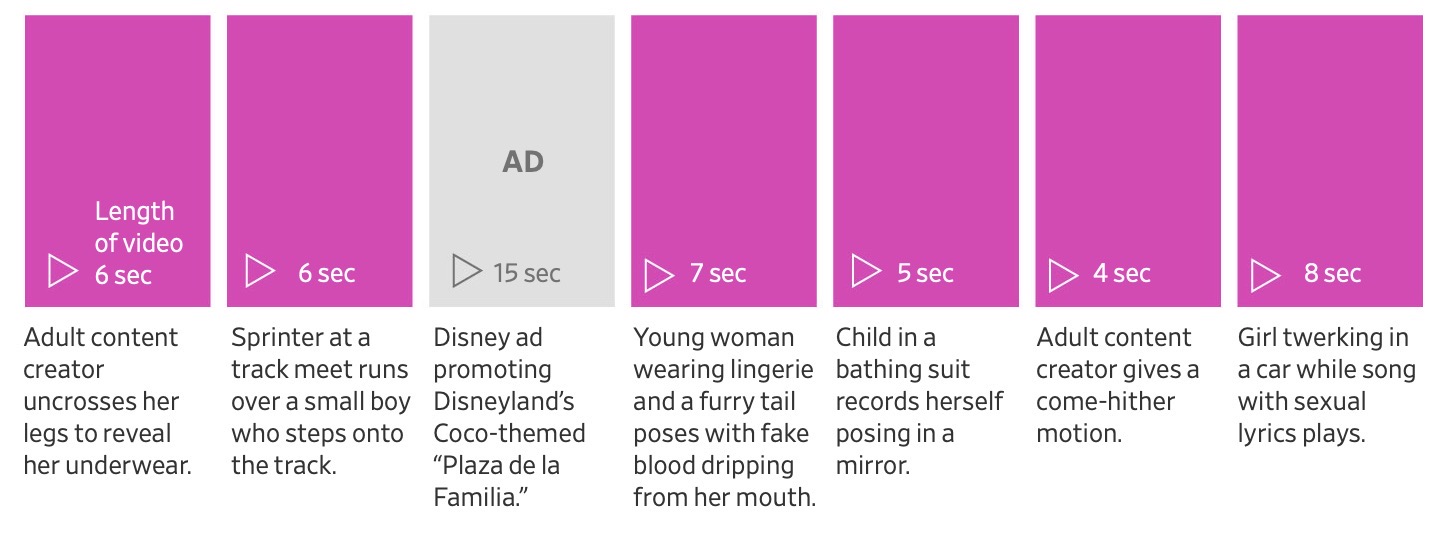

The Wall Street Journal conducted tests revealing a disturbing mix of content being served to test accounts.

The test accounts were configured to follow young influencers like gymnasts and cheerleaders, triggering Instagram’s algorithm to recommend inappropriate videos.

Content suggested by Instagram included risqué footage of children and overtly sexual adult content, interspersed with ads from major U.S. brands.

Having observed that these young influencers have a substantial adult male following, the Journal set up the test accounts, then had them follow some of those adult followers as well, which surfaced even more disturbing content interspersed with ads.

In a concerning sequence, advertisements from major brands like Bumble and Pizza Hut appeared alongside inappropriate content, raising questions about Instagram’s content algorithms and ad placement practices.

Meta, Instagram’s parent company, defended its platform, stating that the Journal’s tests produced a manufactured experience that does not reflect what billions of users see.

However, it declined to comment on the specifics of the mixed video streams featuring children, sex, and advertisements.

The company stated that it has introduced new brand safety tools and that each month it removes millions of videos suspected of violating its standards.

Following the Journal’s previous report on pedophilic content connected through Meta’s algorithms, Meta said it takes down tens of thousands of suspicious accounts each month and has joined a coalition to combat child exploitation.

Numerous well-known brands, including Disney, Walmart, and Hims, whose ads appeared adjacent to inappropriate content in the tests, expressed concerns and took actions such as halting advertising on Meta’s platforms.

The test accounts revealed disturbing videos of children and adult content, leading experts to criticize Instagram’s algorithms for directing such content to users who show interest in young content creators.

Former Meta employees acknowledged that the platform’s algorithms tend to aggregate inappropriate content and that limitations in its content detection systems make this difficult to curtail.

The Canadian Centre for Child Protection has also found continued instances of unsuitable content served on Instagram, raising alarms about the platform’s impact on child safety online.

As advertisers pull back their support, questions remain about Meta’s ability to effectively restrict and prevent the promotion of harmful content on its apps.