Apple Privacy Head Addresses Concerns About New CSAM Detection System

Apple recently previewed a handful of upcoming child safety features for iOS and macOS, including a Child Sexual Abuse Material (CSAM) detection system that several researchers and companies, including WhatsApp, have called “very concerning.”

In an interview with TechCrunch, Apple’s Head of Privacy, Erik Neuenschwander, gave detailed answers to many of these concerns. Neuenschwander said Apple has been working on the problem for some time, including studying the current state-of-the-art techniques, which mostly involve scanning the entire contents of users’ libraries.

Below are some of the highlights from the lengthy interview.

“We have two co-equal goals here. One is to improve child safety on the platform, and the second is to preserve user privacy. And what we’ve been able to do across all three of the features…”

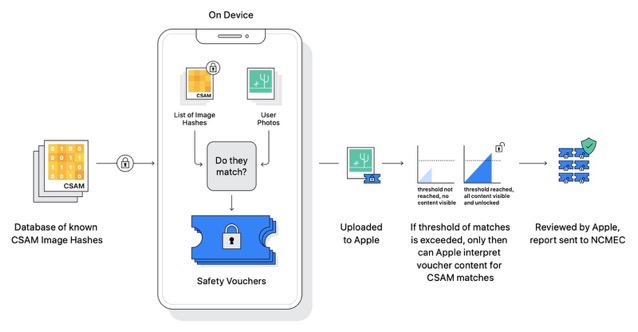

“Our system involves both an on-device component where the voucher is created, but nothing is learned, and a server-side component, which is where that voucher is sent along with data coming to Apple service and processed across the account to learn if there are collections of illegal CSAM.”

“Well first, that is launching only for US iCloud accounts, and so the hypotheticals seem to bring up generic countries or other countries that aren’t the US when they speak in that way, and therefore it seems to be the case that people agree US law doesn’t offer these kinds of capabilities to our government.”

You can read the interview in its entirety at the source page.