Samsung Claps Back on ‘Fake Moon’ Photography Controversy

Samsung has finally spoken out on the ongoing controversy over the heavily processed Moon images its smartphones capture, publishing a blog post on Wednesday with its official response.

Last week, a viral Reddit post demonstrated how much extra detail Samsung’s smartphone camera software computationally adds to any images it detects are of the Moon.

Samsung advertises the “space zoom” feature on some of its phones, like the flagship Galaxy S-series and the foldable Galaxy Z-series, as being capable of capturing high-quality images of the Moon. However, Reddit user ibreakphotos snapped a photo of an artificially blurred image of the Moon and showed how Samsung’s computational photography system added too much extra detail — which didn’t even exist in the original — while processing it.

You can check out a side-by-side comparison of the two images below:

Image: Reddit user “ibreakphotos”

The Reddit exposé led to much uproar over the techniques Samsung is using to identify when a user is trying to capture an image of the Moon and apply heavy processing to it. It also sparked a debate as to whether the final image the user sees is a different image altogether than what they snapped, with many accusing Samsung of misleading marketing.

Samsung’s blog post serves as a direct response to the Reddit post and the claims that have been brought forth in its wake. In it, the South Korean tech giant explains how its “Scene Optimizer” feature, which has enabled Moon photography on its phones since the Galaxy S21 lineup, combines several techniques to improve photos of the Moon.

According to Samsung, the first rung on the ladder is the company’s Super Resolution feature, which is triggered at 25x zoom (or higher) and uses “Multi-frame Processing” to combine more than 10 images to reduce noise and enhance clarity. Super Resolution also optimizes the camera’s exposure, while the “Zoom Lock” feature stabilizes the image to reduce blur.
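Samsung has not published the code behind Multi-frame Processing, so the following is only a minimal sketch of the general idea of combining frames to reduce noise, using plain per-pixel averaging as a stand-in. The scene values, noise level, and function names are all hypothetical, and the sketch assumes the frames are already perfectly aligned.

```python
import random

def noisy_frame(scene, sigma, rng):
    """Return one simulated capture: the true scene plus Gaussian sensor noise."""
    return [pixel + rng.gauss(0.0, sigma) for pixel in scene]

def average_frames(frames):
    """Combine aligned frames by taking the per-pixel mean."""
    count = len(frames)
    return [sum(values) / count for values in zip(*frames)]

def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    return (sum((a - b) ** 2 for a, b in zip(image, scene)) / len(scene)) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable
scene = [float(i % 50) for i in range(2000)]  # hypothetical "true" brightness values
frames = [noisy_frame(scene, sigma=10.0, rng=rng) for _ in range(10)]

single_error = rms_error(frames[0], scene)
stacked_error = rms_error(average_frames(frames), scene)
print(f"one frame: {single_error:.2f}, ten frames averaged: {stacked_error:.2f}")
```

With independent noise, averaging N frames is expected to cut the noise by roughly the square root of N, which is why stacking ten or more shots can yield a visibly cleaner image than any single exposure. A real pipeline would also have to align the frames and handle motion, which this sketch leaves out.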

As for how Samsung’s phones determine they are looking at the Moon in the first place, the company said its software uses an “AI deep learning model” that’s been “built based on a variety of Moon shapes and details, from full through to crescent Moons, and is based on images taken from our view from the Earth.”

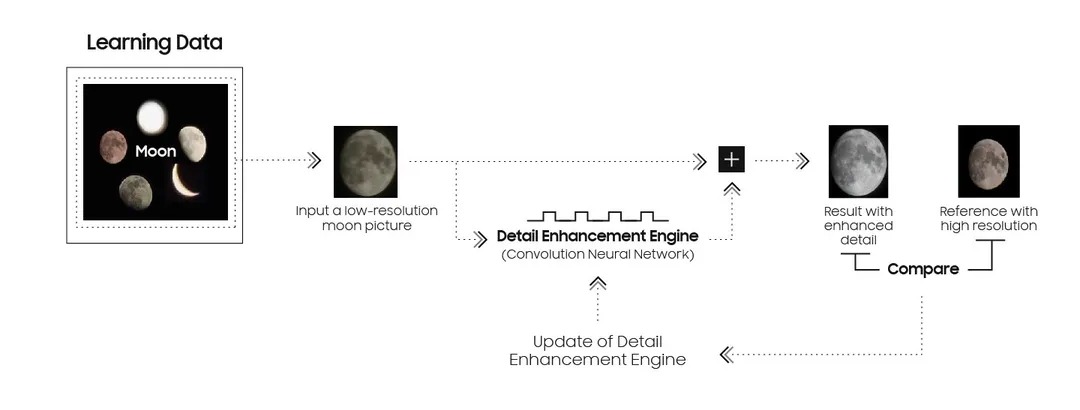

The process doesn’t stop there, though. Samsung said that its camera software also leverages a “deep-learning-based AI detail enhancement engine” built into Scene Optimizer to further improve the image and clean up the details.

In Samsung’s words: “After Multi-frame Processing has taken place, Galaxy camera further harnesses Scene Optimizer’s deep-learning-based AI detail enhancement engine to effectively eliminate remaining noise and enhance the image details even further.”

Samsung shared a flow chart of the entire process, which you can check out below:

Image: Samsung

Samsung has been marketing high-quality Moon photography as a feature in its phones for years now, and this is far from the first time its methods have been criticized.

However, the controversy has garnered much more traction this time around, with several popular YouTubers like Marques “MKBHD” Brownlee and Mrwhosetheboss talking about it. You can check out their videos on what’s going on below:

What’s more, Samsung’s recent blog post is actually a lightly edited translation of an article the company posted in Korean last year. To learn more, check out Samsung’s full blog post, where the company also explains how Scene Optimizer processes a deliberately blurred image of the Moon like the one in the spicy Reddit post.

Are you satisfied with Samsung’s explanation? Let us know what you think in the comments below.