U.S. Watchdog Slams Tesla for ‘Completely Inadequate’ Autopilot, Also Blames Apple

During a hearing on Tuesday about a fatal March 2018 Tesla crash linked to misuse of the Autopilot feature, the National Transportation Safety Board (NTSB) said that Tesla needs to do more to make Autopilot safer.

According to a new report from CNBC, Walter Huang, an Apple engineer and game developer, was killed in March 2018 in Mountain View, California, whilst driving a Tesla Model X. An investigation into the crash found that Tesla’s Autopilot feature was engaged at the time and that he was playing a game on a work-issued device when the accident happened.

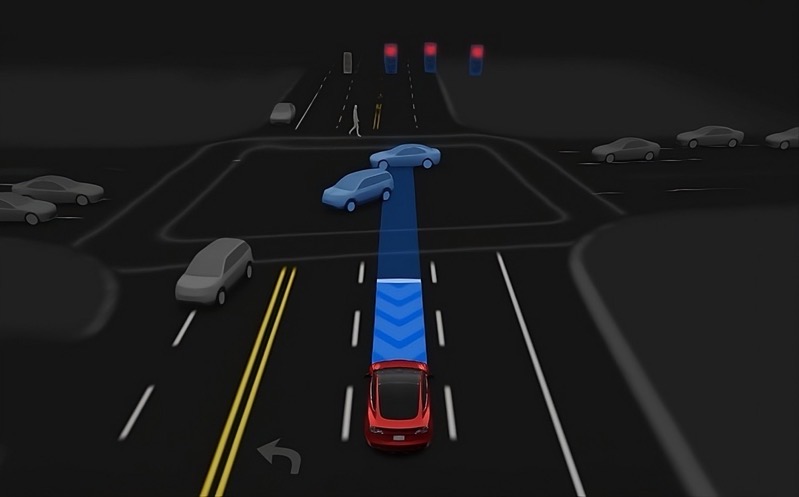

On driver distraction, the report found that Autopilot did not provide an effective means of monitoring the driver’s level of engagement and that the alerts in place were insufficient to prompt a response that would have prevented the crash or reduced its severity. “Tesla needs to develop applications that more effectively sense the driver’s level of engagement and that alert drivers who are not engaged,” the report noted.

The report attacked Autopilot on two fronts. First, drivers are using Autopilot outside the vehicle’s operational design domain (the conditions the system was designed to handle), and although Tesla knows this, it does not restrict where Autopilot can be used.

In a statement, NTSB Chairman Robert Sumwalt criticized Apple, Huang’s employer, for not having a policy that prevents employees from using personal electronic devices while driving:

> Let me circle back to the issue of driver distraction – one that involves the role of employers. Employers have a critical role in fighting distracted driving. At the NTSB, we believe in leading by example. Over a decade ago, under the leadership of my former colleague and NTSB chairman, Debbie Hersman, NTSB implemented a broad-reaching policy which bans using Personal Electronic Devices (PEDs) while driving. We know that such policies save lives.
>
> The driver in this crash was employed by Apple – a tech leader. But when it comes to recognizing the need for a company PED policy, Apple is lagging because they don’t have such a policy.

Second, the report found that Autopilot’s collision avoidance assist systems were not designed to detect the crash attenuator (a barrier meant to absorb the impact of a collision) and therefore did not detect it. Because the hazard went undetected, the vehicle accelerated toward it, no alert was issued, and emergency braking did not activate. “For partial driving automation systems to be safely deployed in a high-speed operating environment, collision avoidance systems must be able to effectively detect potential hazards and warn of potential hazards to drivers,” the report stated.

“If you own a car with partial automation, you do not own a self-driving car,” said Sumwalt. “So don’t pretend that you do. This means that when driving in the supposed self-driving mode, you can’t sleep. You can’t read a book. You can’t watch a movie or TV show. You can’t text. And you can’t play video games.”

Tesla’s Autopilot has come into question before, but mostly because of drivers’ actions rather than Tesla’s technology. Some drivers have fallen asleep behind the wheel while Autopilot controlled their car, although the company warns that drivers should never do this.

“While using Autopilot, it is your responsibility to stay alert, keep your hands on the steering wheel at all times, and maintain control of your car. Before enabling Autopilot, the driver first needs to agree to ‘keep your hands on the steering wheel at all times’ and to always ‘maintain control and responsibility for your vehicle,’” Tesla’s support page on Autopilot reads.