Apple Starts Scanning iCloud Photos to Check for Child Abuse

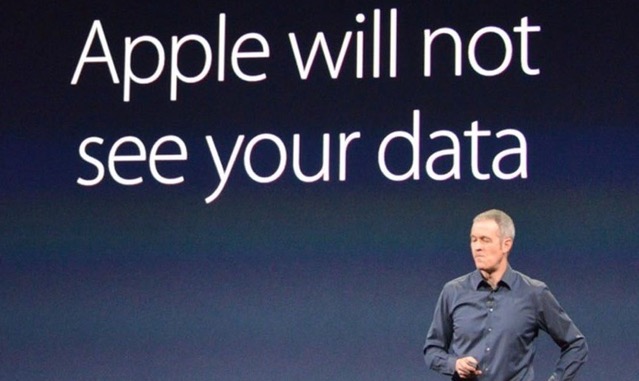

Last year, Apple updated its privacy policy to note that it may scan iCloud images for child abuse material. Now the company’s chief privacy officer, Jane Horvath, has revealed that every image backed up to the company’s online storage service is automatically scanned for illegal photos (via The Telegraph).

Speaking at CES 2020 in Las Vegas, Horvath said that the company is “utilizing some technologies to help screen for child sexual abuse material” without removing any encryption.

Horvath did not detail how Apple checks for child abuse images. Many tech companies, including Facebook, Twitter, and Google, use a system called PhotoDNA, which checks images against a database of previously identified images. Apple describes its own approach as follows:

“Apple is dedicated to protecting children throughout our ecosystem wherever our products are used, and we continue to support innovation in this space.”

“As part of this commitment, Apple uses image-matching technology to help find and report child exploitation. Much like spam filters in email, our systems use electronic signatures to find suspected child exploitation. Accounts with child exploitation content violate our terms and conditions of service, and any accounts we find with this material will be disabled.”
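To illustrate the general idea of matching images by "electronic signatures", here is a minimal sketch in Python. It is not Apple's system and it is not PhotoDNA, neither of which is described in detail here. It assumes a simple "average hash" over a small grayscale pixel grid, and the function names, the sample grids, and the `max_distance` threshold are all hypothetical choices made for illustration.

```python
from typing import Iterable, List

# Assumption: an image is already reduced to a small grid of
# grayscale values (0-255). Real systems use far more robust signatures.
Pixels = List[List[int]]


def average_hash(pixels: Pixels) -> int:
    """Build a bit signature: 1 where a pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two signatures differ."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Pixels, known_hashes: Iterable[int],
                  max_distance: int = 2) -> bool:
    """Return True if the image's signature is close to any known signature.

    max_distance is a hypothetical tolerance for small changes
    such as a slight brightness shift.
    """
    signature = average_hash(pixels)
    return any(hamming_distance(signature, known) <= max_distance
               for known in known_hashes)


# Hypothetical 4x4 sample grids.
known_image = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# The same image, slightly brightened.
brightened = [[value + 5 for value in row] for row in known_image]
# An unrelated pattern.
unrelated = [[10, 10, 200, 200] for _ in range(4)]

database = {average_hash(known_image)}
```

With these sample grids, `matches_known(brightened, database)` is true because the brightness shift leaves the signature unchanged, while `matches_known(unrelated, database)` is false. The point of comparing signatures rather than raw files is that only a compact fingerprint of each previously identified image needs to be stored and compared.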

Horvath also defended Apple’s decision to encrypt iPhones in a way that makes it difficult for security services to unlock them.