Lawyer Uses ChatGPT in Court, Cases Turn Artificial Instead of Intelligent

New York attorney Steven Schwartz, an experienced litigator with the firm Levidow, Levidow & Oberman, recently hit a major setback after using OpenAI’s artificial intelligence tool, ChatGPT, in a negligence lawsuit against the Colombian airline Avianca.

The controversy centers on the AI program generating fake judicial decisions that Schwartz unknowingly cited in his research brief, CNN reports. Wait, what?!

Roberto Mata, Schwartz’s client, claimed that he was injured by a serving cart on an Avianca flight in 2019 due to the negligence of an airline employee. However, according to Judge Kevin Castel of the Southern District of New York, at least six of the cases Schwartz included in his research brief were “bogus judicial decisions with bogus quotes and bogus internal citations,” allegedly generated by ChatGPT. In other words, ChatGPT just made up the cases and Schwartz didn’t even notice or check.

The cited cases, among them Varghese v. China Southern Airlines and Estate of Durden v. KLM Royal Dutch Airlines, appear to have been made up entirely by the AI chatbot. Just totally bogus. In an affidavit, Schwartz expressed regret for relying on ChatGPT’s output without proper verification, saying he had never used the AI tool for legal research before this case.

Schwartz is scheduled to appear at a sanctions hearing on June 8. “I greatly regret having utilized generative artificial intelligence to supplement the legal research performed herein and will never do so in the future without absolute verification of its authenticity,” he wrote in the affidavit.

The issue came to light after lawyers from Condon & Forsyth, representing Avianca, questioned the validity of the cases in a letter to Judge Castel. Peter LoDuca, a fellow attorney at Schwartz’s firm, said he had trusted Schwartz’s research and had not taken part in it himself.

Judge Castel has ordered Schwartz to show cause why he shouldn’t face sanctions “for the use of a false and fraudulent notarization.”

Schwartz’s affidavit included screenshots of his exchange with ChatGPT, in which the chatbot twice confirmed that the non-existent cases were real. The AI tool insisted the cases were “real” and could be found on “reputable legal databases.” Instead of checking those databases himself, Schwartz simply took the chatbot at its word. Ouch.

Neither Schwartz nor LoDuca has commented publicly on the matter. This unprecedented case raises questions about the trustworthiness and potential pitfalls of using AI in legal proceedings.

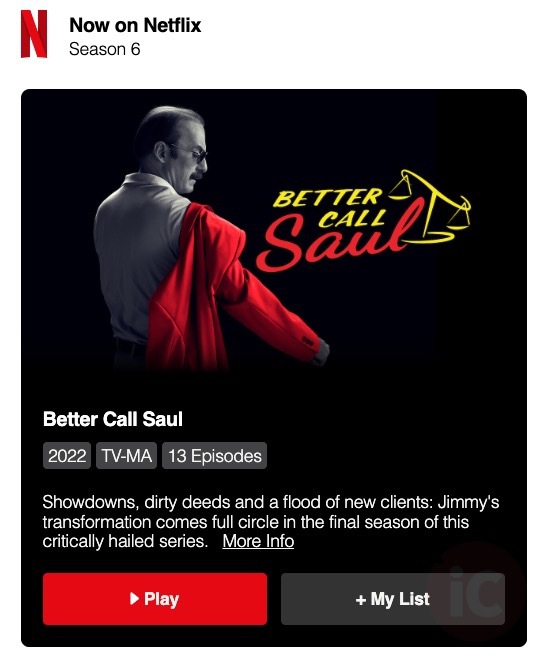

The whole story sounds like an episode of Better Call Saul (now streaming its final season on Netflix Canada). It’s all good, man.