NVIDIA Unveils GH200 Grace Hopper Superchip for Advanced AI Computing

NVIDIA has announced the GH200 Grace Hopper platform, targeting advanced computing and generative AI.

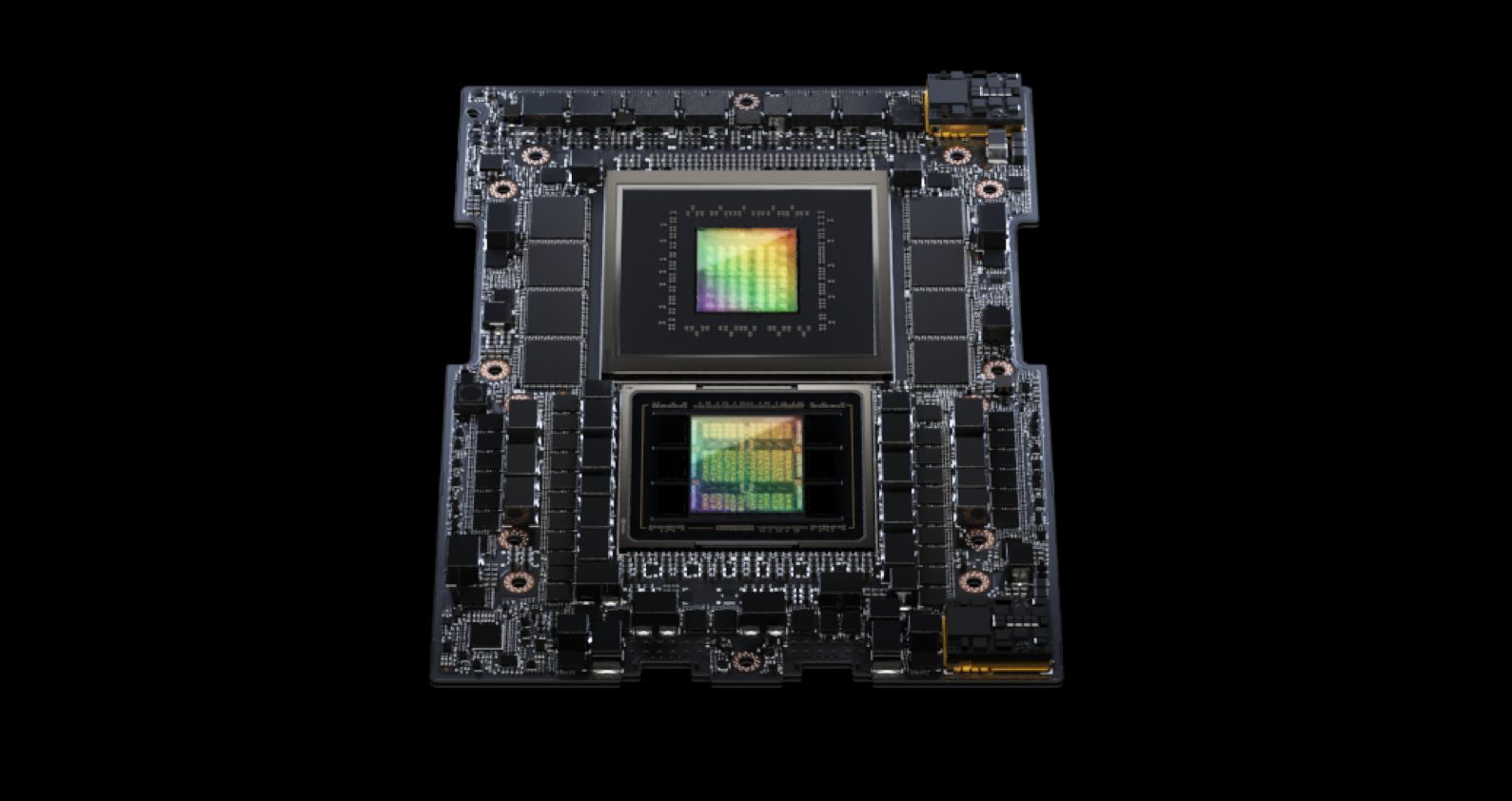

The platform is built on a new Grace Hopper Superchip, which NVIDIA describes as the world's first HBM3e processor.

Built to handle the most complex generative AI workloads, the GH200 Grace Hopper platform is offered in several configurations to suit different requirements.

The dual configuration stands out, offering up to 3.5 times the memory capacity and three times the bandwidth of its predecessor.

This configuration consists of a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of the latest HBM3e memory.
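The dual-configuration totals can be split per Superchip with simple arithmetic. The sketch below assumes that "dual" means exactly two identical Superchips sharing the quoted resources evenly; the variable and function names are illustrative, not part of any NVIDIA tool.

```python
# Dual-configuration totals quoted in the announcement.
DUAL_TOTALS = {
    "arm_neoverse_cores": 144,
    "ai_petaflops": 8,
    "hbm3e_gb": 282,
}

# Assumption: the dual configuration pairs two identical Grace Hopper Superchips.
SUPERCHIPS = 2


def per_superchip(totals: dict[str, int], count: int) -> dict[str, float]:
    """Divide each dual-configuration total evenly across the Superchips."""
    return {name: value / count for name, value in totals.items()}


if __name__ == "__main__":
    for name, value in per_superchip(DUAL_TOTALS, SUPERCHIPS).items():
        # The :g format drops the trailing ".0" from whole-number results.
        print(f"{name}: {value:g} per Superchip")
```

Under that assumption, each Superchip accounts for 72 cores, four petaflops of AI performance and 141GB of HBM3e.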

NVIDIA founder and CEO Jensen Huang highlighted the Superchip's memory technology and bandwidth, which improve throughput, along with the ability to connect GPUs to aggregate performance and a server design that can be easily deployed across the entire data center.

At the core of the GH200 platform is the Grace Hopper Superchip, which can be connected to additional Superchips through NVIDIA NVLink.

This allows multiple Superchips to work together to deploy the giant models used in generative AI.

This high-speed, coherent interconnect gives the GPU full access to CPU memory, providing a combined 1.2TB of fast memory in the dual configuration.
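The 1.2TB figure combines GPU and CPU memory. As a rough check, the sketch below adds the 282GB of HBM3e to the CPU-attached memory of two Superchips. The 480GB of LPDDR5X per Grace CPU is an assumption drawn from NVIDIA's Grace specifications rather than from this announcement, and the constant and function names are hypothetical.

```python
# HBM3e total for the dual configuration, as quoted in the announcement.
HBM3E_TOTAL_GB = 282
# ASSUMPTION: up to 480GB of LPDDR5X per Grace CPU (not stated in the announcement).
LPDDR5X_PER_CPU_GB = 480
# ASSUMPTION: the dual configuration contains two Superchips, one Grace CPU each.
SUPERCHIPS = 2


def combined_fast_memory_gb(hbm_total_gb: int, cpu_mem_gb: int, count: int) -> int:
    """Add the GPU HBM3e total to the CPU-attached memory of every Superchip."""
    return hbm_total_gb + cpu_mem_gb * count


if __name__ == "__main__":
    total_gb = combined_fast_memory_gb(HBM3E_TOTAL_GB, LPDDR5X_PER_CPU_GB, SUPERCHIPS)
    # Decimal units: 1 TB = 1000 GB.
    print(f"{total_gb} GB ≈ {total_gb / 1000:.1f} TB")
```

The result, 1,242GB in decimal units, is consistent with the rounded 1.2TB figure; a system fitted with a different LPDDR5X capacity would change the total accordingly.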

Adoption is already under way: leading system manufacturers offer systems based on the previously announced Grace Hopper Superchip.