EU Proposes New Child Abuse Content Detection in Chat Apps

According to The Verge, the European Commission has proposed new rules that would require messaging apps and other private platforms to scan users’ encrypted messages for child sexual abuse material (CSAM) and communication that constitutes “grooming” a minor or the “solicitation of children.”

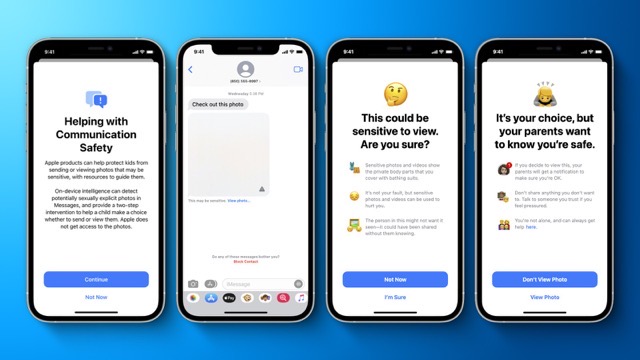

A draft of the proposal, leaked earlier this week, was poorly received by privacy experts. Critics said the EU's plans are even more invasive than the CSAM detection system Apple announced last year. Apple came close to rolling out that system, then delayed it indefinitely after pushback from companies such as WhatsApp and from more than 90 advocacy groups.

“This document is the most terrifying thing I’ve ever seen,” cryptography professor Matthew Green said in a tweet. “It describes the most sophisticated mass surveillance machinery ever deployed outside of China and the USSR. Not an exaggeration.”

This document is the most terrifying thing I’ve ever seen. It is proposing a new mass surveillance system that will read private text messages, not to detect CSAM, but to detect “grooming”. Read for yourself. pic.twitter.com/iYkRccq9ZP

— Matthew Green (@matthew_d_green) May 10, 2022

"This looks like a shameful general #surveillance law entirely unfitting for any free democracy," said Jan Penfrat of the digital advocacy group European Digital Rights (EDRi).

If passed, the regulation would require "online service providers" to scan the private messages of users targeted by a "detection order." These providers include app stores, hosting companies, and "interpersonal communications service" providers such as messaging apps. The scanning would look for CSAM and for behavior that amounts to "grooming" or the "solicitation of children."

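Grooming and solicitation are patterns of behavior, not known images, so providers would have to rely on machine vision tools and other AI systems to flag the text and images that fall into those two categories. Such classifiers are not perfectly accurate. They can miss real abuse, and they can flag innocent conversations as suspicious.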

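Apple's proposal, by comparison, was narrower. It would have checked photos on users' devices, before they were uploaded to iCloud Photos, against a database of known CSAM. A separate feature in Messages would have warned children about sexually explicit images. Apple also confirmed in August 2021 that it had been scanning iCloud Mail for CSAM since 2019.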

"Detection orders" would be issued by authorities in individual EU member states. The Commission says these orders would be "targeted and specified." However, little is known about exactly how they would work or what criteria would be used to decide which users are targeted.

Narrow targeting risks singling out and marginalizing particular individuals and groups. Broad targeting of large numbers of people at once would be highly invasive. The rules could also undermine end-to-end encryption. End-to-end encrypted messages can only be read on the sender's and recipient's devices, so scanning them would mean adding outside detection software to that pipeline, running before messages are encrypted or after they are decrypted.

“There’s no way to do what the EU proposal seeks to do, other than for governments to read and scan user messages on a massive scale,” Joe Mullin, a senior policy analyst at the digital rights group Electronic Frontier Foundation (EFF), told CNBC. “If it becomes law, the proposal would be a disaster for user privacy not just in the EU but throughout the world.”